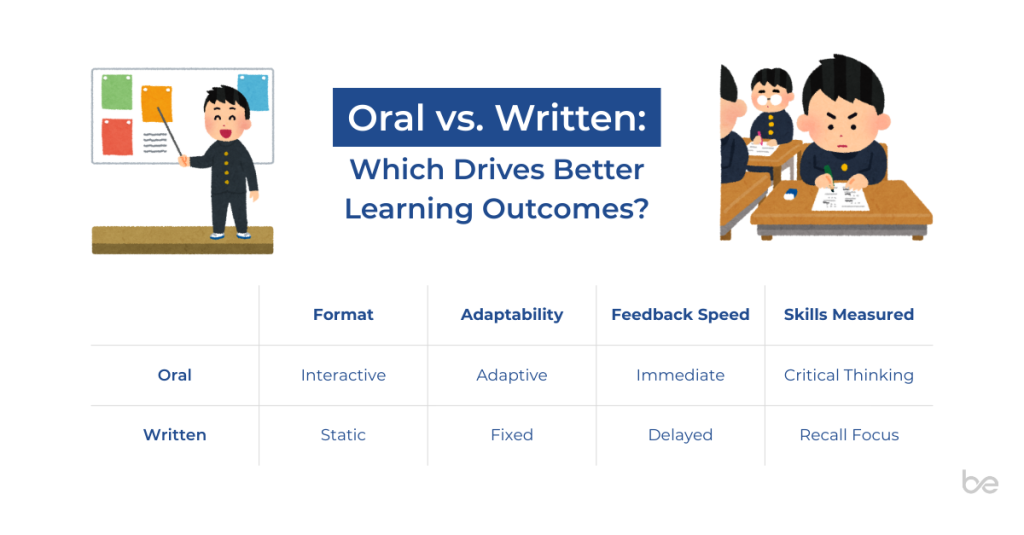

Oral examinations and in-class recitations have emerged as powerful pedagogical tools, often outperforming traditional written assessments in evaluating both academic mastery and real-world competencies. Research indicates that oral formats can yield significant learning gains, with students demonstrating improved performance and deeper retention compared with written alternatives (Lyster & Saito, 2010; Hattie, 2009).

Meta-analyses also show that oral feedback can reach effect sizes approaching 0.87 in certain contexts, particularly language learning. That is substantially higher than the effect sizes reported for peer feedback (0.58) and computer-based feedback (0.38) (Lyster & Saito, 2010; Hattie, 2009). This advantage is usually attributed to what oral assessment uniquely allows: real-time interaction, immediate clarification, and adaptive questioning that responds to the student’s thought process.
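The effect sizes quoted in these meta-analyses are standardized mean differences in the family of Cohen's d. Each one measures the gap between a group that received an intervention and a comparison group, in units of their pooled standard deviation. The standard two-group form is shown below. Individual meta-analyses may apply further corrections, such as small-sample adjustments, which this simplified version omits.

```latex
d = \frac{\bar{x}_{\text{intervention}} - \bar{x}_{\text{comparison}}}{s_{\text{pooled}}},
\qquad
s_{\text{pooled}} = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}}
```

Read this way, an effect size of 0.87 means the average student in the oral-feedback condition scored about 0.87 standard deviations above the average student in the comparison condition.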

The COVID-19 pandemic further accelerated this shift. Virtual oral assessment implementations reported 84% cost reductions while maintaining assessment validity (Qpercom, 2021). One example is Qpercom’s bespoke platform for remote Objective Structured Clinical Examinations (OSCEs).

Contemporary frameworks now combine constructivist learning theory, AI-powered platforms, and inclusive design principles to create assessment methods that simultaneously measure and enhance student learning. The following sections explore the evidence-based approaches educators can use across disciplines—many of which inform how better-ed is designed for maximum impact.

Theoretical Foundations That Drive Effective Oral Assessment

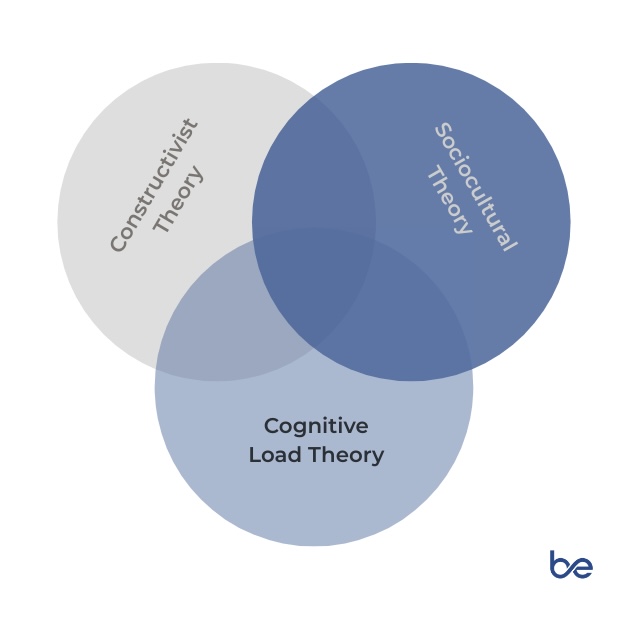

Three theoretical frameworks underpin the success of oral assessment:

1. Constructivist Learning Theory

Frames oral assessment as active knowledge construction, where students articulate understanding and engage in meaning-making through dialogue with an examiner (Vygotsky, 1978).

2. Sociocultural Theory & Zone of Proximal Development

Suggests that oral exams can operate within the learner’s zone of proximal development. In that zone, an examiner’s prompts scaffold students just beyond what they can do independently (Vygotsky, 1978).

3. Cognitive Load Theory

Indicates that well-designed oral formats can reduce extraneous load by removing complex text-processing demands, freeing mental resources for higher-order reasoning (Sweller, 2011).

These foundations converge in authentic assessment frameworks like the Six-Dimensional Framework, which examines content type, interaction patterns, authenticity, structure, examiner roles, and oral language considerations.

Ensuring Reliability in Oral Assessment

Subjectivity in oral exams can be mitigated through structured approaches:

- Structured Oral Examinations (SOEs) standardize questions and use calibrated scoring rubrics. In medical and dental education, they have shown inter-rater reliability above 0.80, measured as intraclass correlation coefficients (ICCs) (BMC Med Educ, 2016).

- By widely used guidelines, reliability coefficients below 0.40 are poor, 0.40–0.59 fair, 0.60–0.74 good, and 0.75 or above excellent (Cicchetti, 1994).

- Correlation studies show moderate positive relationships between oral assessments and other evaluation formats (Sulistiani et al., 2023; Ali et al., 2016):
  - multiple-choice questions (MCQs): 0.48–0.52
  - OSCE performance: 0.48–0.51
  - written exams: 0.44
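The reliability figures above can be computed from raw examiner marks. Below is a minimal Python sketch. It assumes a complete matrix in which every examiner scores every student. It computes ICC(2,1) in Shrout and Fleiss's notation: two-way random effects, absolute agreement, single examiner. This is one common ICC variant among several, and the cited studies may have used a different one. The function names are illustrative and are not taken from any cited study or from better-ed.

```python
from statistics import fmean


def icc_2_1(scores: list[list[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single examiner.

    scores[i][j] is the mark examiner j gave student i; every cell must be filled.
    """
    n = len(scores)                      # students (rows)
    k = len(scores[0]) if scores else 0  # examiners (columns)
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete matrix with at least 2 students and 2 examiners")

    grand_mean = fmean(x for row in scores for x in row)
    row_means = [fmean(row) for row in scores]
    col_means = [fmean(row[j] for row in scores) for j in range(k)]

    # Partition the total sum of squares into students, examiners, and residual.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("scores have no variance")
    return (ms_rows - ms_error) / denominator


def cicchetti_band(coefficient: float) -> str:
    """Map a reliability coefficient to Cicchetti's (1994) descriptive bands."""
    if coefficient < 0.40:
        return "poor"
    if coefficient < 0.60:
        return "fair"
    if coefficient < 0.75:
        return "good"
    return "excellent"


marks = [[7, 8], [5, 5], [9, 9], [4, 5], [6, 7]]  # made-up: 5 students x 2 examiners
icc = icc_2_1(marks)
print(round(icc, 2), cicchetti_band(icc))  # 0.92 excellent
```

Cross-format correlations like those listed above are commonly reported as Pearson's r. Python 3.10 and later can compute Pearson's r for two equal-length score lists with `statistics.correlation(x, y)`.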

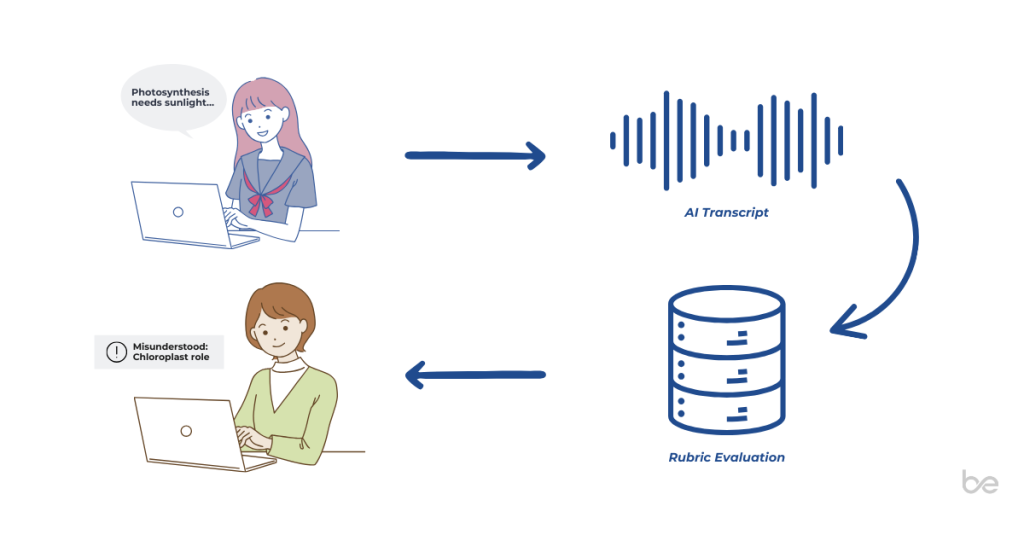

better-ed applies these reliability principles by pairing rubric-driven evaluation with AI-assisted transcript analysis. This reduces scoring variance while retaining the benefits of live conversation.
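Here is a hypothetical Python sketch of how weighted rubric scoring and examiner moderation might fit together. It makes "rubric-driven evaluation" concrete, but it is an illustration only. The criterion names, weights, 1-to-4 level scale, and disagreement tolerance are all assumptions, not better-ed's actual rubric or implementation. The sketch also does not attempt the AI transcript-analysis step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One rubric row. All names and values here are hypothetical."""
    name: str
    weight: float        # relative importance; weights need not sum to 1
    max_level: int = 4   # assumed 1-4 performance scale


def weighted_score(rubric: list[Criterion], levels: dict[str, int]) -> float:
    """Combine one examiner's per-criterion levels into a 0-100 score."""
    total_weight = sum(c.weight for c in rubric)
    earned = sum(c.weight * levels[c.name] / c.max_level for c in rubric)
    return 100 * earned / total_weight


def criteria_to_moderate(rubric: list[Criterion], examiner_a: dict[str, int],
                         examiner_b: dict[str, int], tolerance: int = 1) -> list[str]:
    """Name the criteria where two examiners differ by more than `tolerance` levels."""
    return [c.name for c in rubric
            if abs(examiner_a[c.name] - examiner_b[c.name]) > tolerance]


rubric = [Criterion("accuracy", 2), Criterion("reasoning", 2), Criterion("communication", 1)]
examiner_a = {"accuracy": 4, "reasoning": 3, "communication": 2}
examiner_b = {"accuracy": 4, "reasoning": 1, "communication": 3}
print(weighted_score(rubric, examiner_a))                    # 80.0
print(weighted_score(rubric, examiner_b))                    # 65.0
print(criteria_to_moderate(rubric, examiner_a, examiner_b))  # ['reasoning']
```

The design choice here is to surface disagreement per criterion rather than average it away. A two-level gap on "reasoning" goes to moderation even though the overall scores look only moderately apart.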

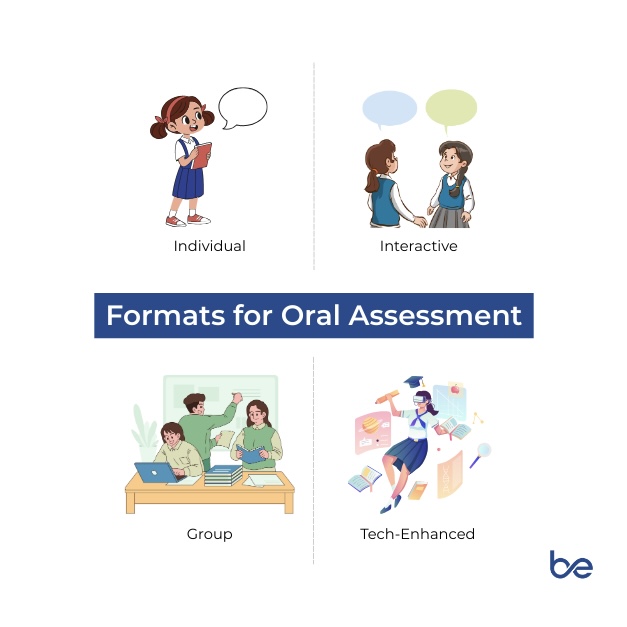

Choosing the Right Oral Assessment Format

Different formats serve different learning objectives:

- Individual Oral Examinations: Allow rigorous, in-depth evaluation; ideal for professional competency assessment.

- Interactive Oral Assessments: Replicate workplace collaboration, improving higher-order thinking and communication skills.

- Group Oral Presentations: Assess both individual accountability and teamwork skills.

- Technology-Enhanced Formats: AI and VR assessments are expanding. One study reported a 0.851 correlation between VR immersion and improved language learning outcomes (Yang et al., 2021).

better-ed enables educators to select the most effective format for their goals, from one-on-one dialogues to collaborative oral problem-solving.

Reducing Anxiety and Improving Inclusivity

Oral assessment anxiety is a known challenge. However, it can be mitigated with:

- Clear expectations and practice opportunities

- Structured rubrics and recorded exemplars

- Supportive environments with “no put-downs” policies (Horwitz et al., 1986)

Inclusivity considerations:

- Cultural norms around eye contact and authority (Brown & Abeywickrama, 2010)

- Accessibility accommodations (assistive tech, extended time)

- For English Language Learners, evaluation focused on content rather than language alone

better-ed supports inclusivity with voice-based evaluation focused on conceptual understanding, adjustable pacing, and multilingual AI feedback.

Leveraging Technology for Scale and Quality

AI-powered oral assessment tools enable scalable, authentic questioning while preserving integrity. Platforms like Qpercom Observe report significant efficiency gains: a 70% reduction in administration time and 84% cost savings (Qpercom, 2021).

VR and AI integration can extend the benefits beyond scale by offering immersive, low-risk environments for practicing professional discourse (Yang et al., 2021). better-ed uses AI for real-time analysis and is built to be VR-ready, so its assessment delivery can evolve alongside these tools.

Aligning Oral Assessment with Learning Objectives

Aligning oral questions with Bloom’s Taxonomy helps ensure that higher-order skills are targeted. Authentic assessments increase motivation and engagement, especially when they mirror workplace communication (Wiggins, 1998).

Formative assessment integration—where assessment doubles as learning—improves metacognition and skill transfer (Black & Wiliam, 1998).

better-ed’s adaptive questioning ensures assessments align with both curriculum goals and real-world competencies.
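Adaptive questioning can be pictured as a loop that moves the next question up or down Bloom's Taxonomy depending on how well the previous answer went. This also echoes the scaffolding idea from the zone of proximal development. The Python sketch below is a hypothetical illustration, not better-ed's algorithm. It assumes:

- the six levels of the revised taxonomy
- a 0-to-1 rubric score per answer
- invented thresholds for advancing (0.8) and for stepping back to scaffold (0.5)

```python
from enum import IntEnum


class Bloom(IntEnum):
    """Levels of the revised Bloom's Taxonomy, lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


def next_level(current: Bloom, answer_score: float,
               advance_at: float = 0.8, scaffold_below: float = 0.5) -> Bloom:
    """Choose the Bloom level for the next oral question.

    answer_score is a 0-1 rubric score for the previous answer. The thresholds
    are hypothetical defaults, not values from any cited study.
    """
    if answer_score >= advance_at:
        return Bloom(min(current + 1, Bloom.CREATE))    # stretch: one level up
    if answer_score < scaffold_below:
        return Bloom(max(current - 1, Bloom.REMEMBER))  # scaffold: one level down
    return current                                      # consolidate at this level


level = Bloom.APPLY
for score in [0.9, 0.85, 0.4, 0.6]:
    level = next_level(level, score)
    print(level.name)  # prints ANALYZE, EVALUATE, ANALYZE, ANALYZE on separate lines
```

Moving one level at a time keeps the questioning close to the student's current performance. This is the intent behind scaffolding just beyond what a student can do independently.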

Maintaining Quality Through Continuous Improvement

Fairness requires:

- Examiner training and calibration

- Statistical monitoring for bias

- Transparent appeals processes (BMC Med Educ, 2016)
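Statistical monitoring for bias can take several forms. One concrete form is checking for examiner severity or leniency, sometimes called the hawk-dove effect. Below is a minimal Python sketch. It assumes students are allocated to examiners at random, so examiner groups should not differ systematically in ability. The sketch compares each examiner's mean with the median of all examiner means, scaled by the standard deviation of all scores. The 0.5 threshold and the function name are illustrative assumptions. This check does not address other forms of bias, such as score differences across student demographic groups.

```python
from statistics import fmean, median, pstdev


def flag_examiner_drift(scores_by_examiner: dict[str, list[float]],
                        threshold: float = 0.5) -> dict[str, float]:
    """Return {examiner: shift} for examiners whose mean score is more than
    `threshold` overall standard deviations from the median examiner mean.

    Negative shifts suggest a severe examiner ("hawk"); positive shifts a
    lenient one ("dove"). Assumes random allocation of students to examiners.
    """
    all_scores = [s for scores in scores_by_examiner.values() for s in scores]
    spread = pstdev(all_scores)
    if spread == 0:
        return {}  # every score identical: nothing to compare

    examiner_means = {name: fmean(scores) for name, scores in scores_by_examiner.items()}
    # The median keeps a single extreme examiner from dragging the baseline.
    baseline = median(examiner_means.values())

    flagged = {}
    for name, mean_score in examiner_means.items():
        shift = (mean_score - baseline) / spread
        if abs(shift) > threshold:
            flagged[name] = round(shift, 2)
    return flagged


scores = {  # made-up marks for three examiners
    "A": [70, 72, 68, 71],
    "B": [69, 70, 73, 71],
    "C": [55, 58, 60, 57],
}
print(flag_examiner_drift(scores))  # {'C': -2.01}
```

A flag like this is a prompt for recalibration or review, not proof of bias. It fits naturally alongside the examiner training and appeals processes listed above.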

better-ed’s analytics track reliability metrics and support iterative improvement.

Why better-ed Works This Way

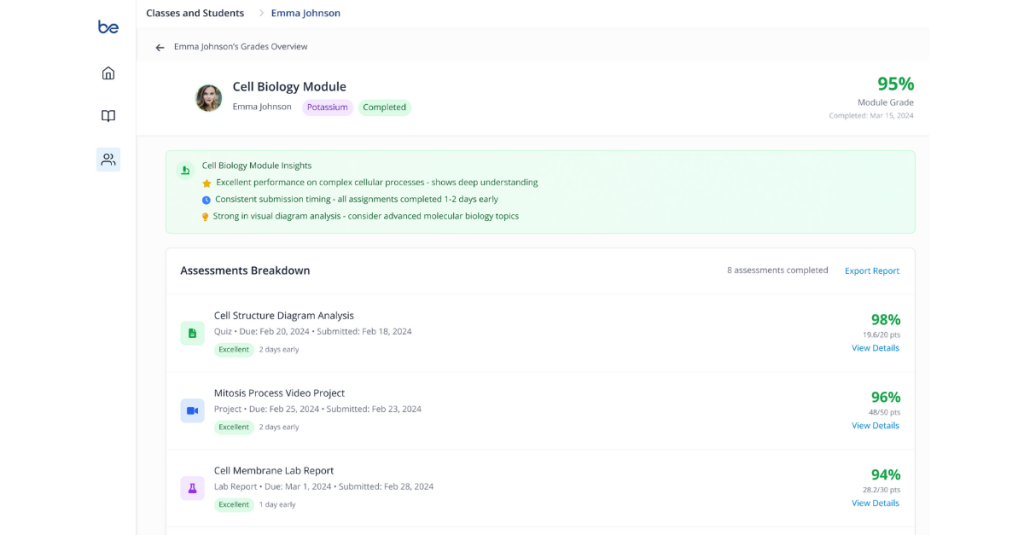

better-ed’s voice-enabled AI assessment platform was built to embody the best practices outlined above. By combining:

- Constructivist, inclusive design

- Structured reliability protocols

- Alignment with authentic workplace skills

- Scalable, technology-driven analysis

better-ed empowers educators to assess not just what students know, but how they think—at scale, with fairness, and in a way that supports ongoing learning.

Our pilot programs have shown that when students explain their reasoning in their own voice, teachers gain insights that written assessments simply can’t capture—leading to better feedback, higher retention, and more confident learners.

Ready to see evidence-based oral assessment in action?

With better-ed, educators can create authentic, reliable, and inclusive assessments that go beyond the written test—empowering students to think, speak, and learn with confidence.

Book a demo or start your pilot program today and experience how voice-driven AI can transform assessment in your classroom.

better-ed is a product of Predictive Systems Inc. (PSI), a leader in secure, ethical, and high-performing AI solutions for education, legal, and enterprise sectors. Explore more of our services.

References

- Lyster, R., & Saito, K. (2010). Oral feedback in classroom SLA: A meta-analysis. Studies in Second Language Acquisition, 32(2), 265–302.

- Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. Routledge.

- Qpercom. (2021). No More Zoom: How we built a bespoke software platform for virtual OSCEs. Retrieved from https://www.qpercom.com/no-more-zoom-how-we-built-a-bespoke-software-platform-for-virtual-osces/

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

- Sweller, J. (2011). Cognitive load theory. Psychology of Learning and Motivation, 55, 37–76.

- BMC Med Educ. (2016). Reliability and validity of structured oral examinations in medical education.

- Cicchetti, D. (1994). Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments. Psychological Assessment, 6(4), 284–290.

- Sulistiani, T., et al. (2023). Analyzing the correlation of oral examination with SOCA and MCQ on medical students.

- Ali, K., et al. (2016). The validity and reliability of an OSCE in dentistry. European Journal of Dental Education, 20(3), 135–141.

- Yang, H., et al. (2021). Virtual reality-integrated immersion-based teaching to English language learning outcome. Frontiers in Psychology, 12, 767363.

- Horwitz, E. K., et al. (1986). Foreign language classroom anxiety. The Modern Language Journal, 70(2), 125–132.

- Brown, H. D., & Abeywickrama, P. (2010). Language assessment: Principles and classroom practices. Pearson.

- Wiggins, G. (1998). Educative assessment: Designing assessments to inform and improve student performance. Jossey-Bass.

- Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education, 5(1), 7–74.